After last week’s flurry of genAI news, I did not think that this week would be as busy. Silly me.

OpenAI released their newest model, GPT-4.1. It's mostly a "better performance, same cost" model, but it also has a larger context window: 1 million tokens. It's not a reasoning model, though, and as you may recall from last week's chart, reasoning models are the most capable.

It turns out that last week's chart showing Google in the lead is already out of date. This week brought two new reasoning models from OpenAI: GPT o3 and GPT o4-mini. They have been received quite well and appear to be game changers for several reasons:

- This is a big step toward agentic AI because the models do more: they can perceive, they can "reason," and they can take actions. For instance, they can not only retrieve a photo from the internet but also zoom in on it, rotate it, and crop it to focus on the important parts.

- They incorporate a new technique called “visual reasoning,” so that the models can “think with images,” rather than just with text. This gives them SOTA performance on multimodal benchmarks.

- They demonstrate that there appear to be (at least) three axes of scaling.

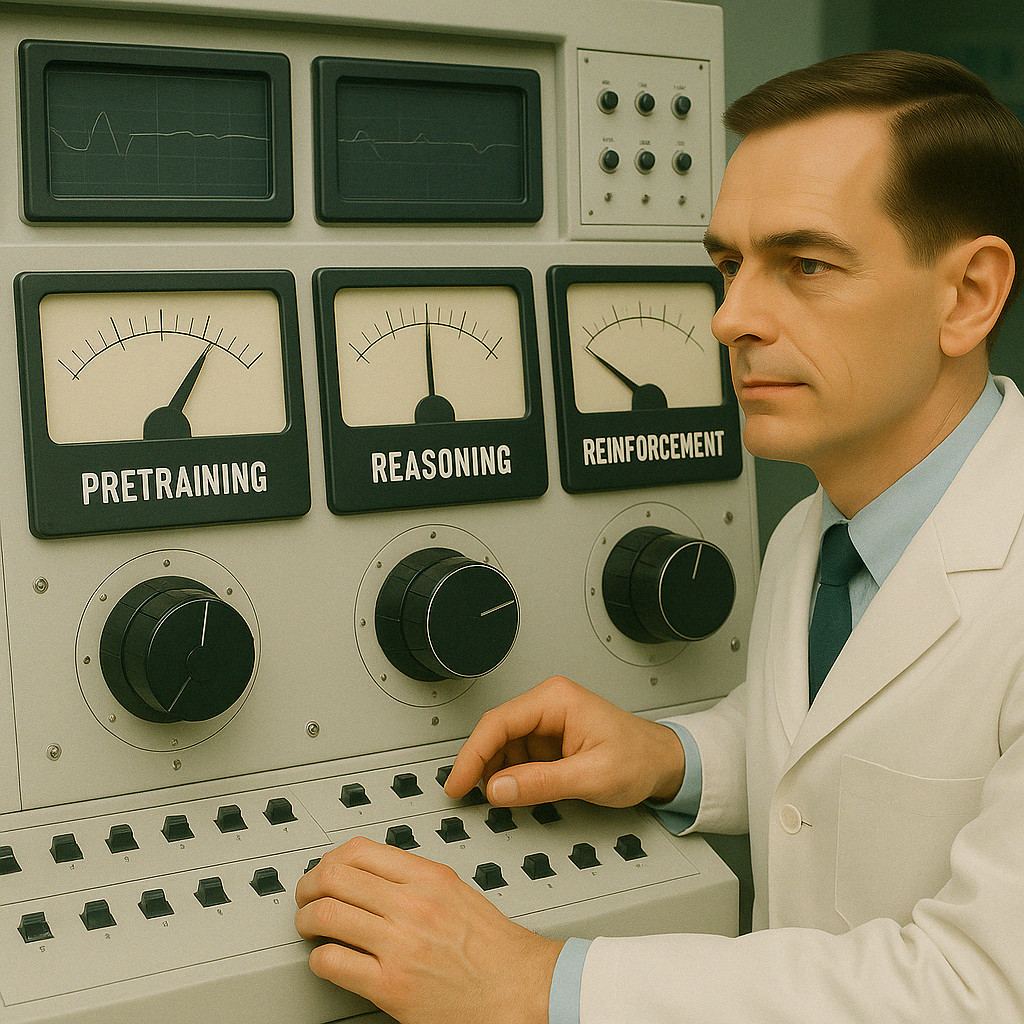

Three axes of scaling? A quick refresher. To get more scale (better results across broader scenarios) in a model, you can:

- Make the model bigger by increasing the number of parameters and the pre-training associated with it. Pre-training creates the model by calculating what the parameter weights should be. This was the classic approach through last year (the "fast" in my "Thinking, Fast and Slow" post).

- Give it more time to "think." Last year, "reasoning" models arrived; they are designed to get better results by spending more time "thinking" before they answer. Asking a model to describe what it's going to do before doing it, and to "show its work," helps much like it does for us: LLMs get better results this way. Spending this extra compute when the model answers, rather than during training, is called test-time compute (the "slow" in my "Thinking, Fast and Slow" post).

- Do more reinforcement learning. This isn’t a new idea, but doing a lot more of it is new. It appears that this is another axis; just like scaling pre-training and scaling test-time compute, scaling reinforcement learning also vastly improves the capabilities of the model!

Reinforcement learning occurs after pre-training (which sets the weights of the model parameters) and before test-time compute. In reinforcement learning, the model creates an output and is then given feedback on the quality of that output (was it good or bad?). That feedback is used to adjust the model so it gets better at creating good outputs. The technique was used early on: RLHF (Reinforcement Learning from Human Feedback) was a key technique in the GPT-3 era, most famously for ChatGPT. It was also used extensively for DeepSeek R1. But now it appears that OpenAI has done a lot more of it, and they say this is yet another dimension where more compute brings big improvements in model performance.
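To make the generate, score, adjust loop concrete, here is a toy sketch in Python. It is not how OpenAI (or anyone) actually trains an LLM. It's a hypothetical illustration in which the "model" is just a table of preference scores over three canned answers, a made-up `reward` function stands in for human or automated feedback, and a simple REINFORCE-style policy-gradient update nudges the model toward answers that earn good feedback. All names here (`ANSWERS`, `reward`, `train`) are invented for illustration.

```python
import math
import random

# Hypothetical toy "model": one preference score (logit) per canned answer.
# A real LLM has billions of weights; this small table stands in for them.
ANSWERS = ["Paris", "Lyon", "I don't know"]


def softmax(logits):
    """Turn raw preference scores into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reward(answer):
    """Hypothetical feedback: +1 for a good output, -1 for a bad one.
    Stands in for a human rater (as in RLHF) or an automated checker."""
    return 1.0 if answer == "Paris" else -1.0


def train(steps=500, learning_rate=0.1, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * len(ANSWERS)  # start with no preference at all
    for _ in range(steps):
        probs = softmax(logits)
        # 1. The model creates an output, sampled from its current preferences.
        i = rng.choices(range(len(ANSWERS)), weights=probs)[0]
        # 2. The output gets feedback: was it good or bad?
        r = reward(ANSWERS[i])
        # 3. The feedback adjusts the model (REINFORCE-style update):
        #    push the chosen answer's score up if rewarded, down if penalized.
        for j in range(len(logits)):
            grad = (1.0 if j == i else 0.0) - probs[j]
            logits[j] += learning_rate * r * grad
    return softmax(logits)


if __name__ == "__main__":
    for answer, p in zip(ANSWERS, train()):
        print(f"{answer}: {p:.2f}")
```

Run it and nearly all of the probability ends up on the answer that received positive feedback. Real reinforcement learning for LLMs operates on whole generated responses and vastly more parameters, but the generate, score, adjust loop has the same shape.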

That's why these models are game changers: they're a sign that we will continue to see major improvements, even if we can no longer get big improvements from pre-training alone.

Alphabet soup: These companies really need to do better naming (maybe they should ask their AI for suggestions?). GPT o3 replaces GPT o1. GPT o4-mini replaces GPT o3-mini. GPT-4.1 replaces GPT-4o. At least it appears that OpenAI is dropping letters for their non-reasoning models. No longer will we have to distinguish GPT-4o from GPT o4!!!!!!

IBM announced Granite 3.3, their latest LLM (yes, a few big companies besides OpenAI, Google, Anthropic, and Meta are still training their own LLMs). What's significant about this model is that IBM made a number of refinements specifically for RAG. These include a query-rewrite process that helps the model do a better job in multi-turn conversations, and a set of scores indicating whether the model has likely hallucinated, whether the answer is faithful to the source, and whether the citations are accurate. I have no idea how well they work, but these are steps that are definitely needed to ensure reliable responses in business scenarios, ESPECIALLY for agents, which are more prone to making mistakes because of their complexity and serial nature.

Cohere (a company focused on building LLMs oriented toward business content rather than internet content) released Embed-4, an updated embedding model. Trained to work better on enterprise content than other models, it's multimodal and multilingual, and it offers better performance and lower storage costs than Cohere's previous models.

Writer released AI HQ, a platform for building agents in the enterprise, with three elements: an agent builder (in beta), an agent library, and agent observability tools (also in beta). The agent builder is a low-code, graphical tool for setting up an agent by putting together the three pieces Writer sees as necessary for an agent:

- UI: Chat, dashboard, embedded, API

- Blueprint: Business logic, tools, systems, and data

- Technology: Palmyra LLMs and Knowledge Graph

The agent library claims 100 prebuilt agents, although the vast majority are what I would call assistants, or even just GPTs (an LLM plus a prompt). A lot of them are pretty low-value or (to me) seem to overcomplicate what is already a simple task. For example, one "agent" reads your email body and brainstorms subject lines for it. Do we really need that in an agent library?

Microsoft announced computer use within Copilot (not yet available, but coming soon), bringing Copilot up to par with other leading models.

Anthropic announced a Research mode for Claude (so it's now on par with the Deep Research offerings from other vendors) and, more significantly, an integration with Google Workspace. It's unclear from the announcement, but it appears that the integration makes it easy for users to point Claude to specific documents or emails rather than using RAG. I say this because Anthropic says Enterprise admins can activate "Cataloging," which creates a specialized index for Claude to use so it can answer questions about your company's content (using RAG) "without requiring you to specify exact files." Anthropic also rolled out higher pricing tiers for super users: $100/month and $200/month.

A Chinese company I'd never heard of released version 2 of Kling, its image/video generator. The company says it has figured out some new tech, and the results are very impressive; it's worth taking 2 minutes to watch some of the best samples on YouTube.

Finally, an excellent article from HBR about how people are actually using generative AI. It updates a similar article from a year ago, collecting public data to gauge how prevalent different uses of LLMs are. There is no perfect source, but this is the best collection of data I've seen, and the observations are striking. The #1 use case is therapy and companionship (and you can only expect that to grow).

The chart below shows how things have changed over the past year. It's clear that early adopters were technical users who used LLMs to help at work and get things done. Now those uses are only the 4th most common: personal and professional support has shot to #1, content creation is #2, and learning and education has climbed to #3. Note, however, that this summary masks an important detail: pro-level use of LLMs for coding has increased dramatically (improving code went from #19 to #8, and generating code…ready for this…went from #47 to #5!).

My take on why it matters, particularly for generative AI in the workplace

There are a lot of important takeaways from this past week.

- The rate of change is not slowing; if anything, it's accelerating. The models are moving into adjacent areas. First it was just language (written and spoken). Now they're showing a high level of mastery with images, and they're beginning to be able to perform actions (computer control). This is the move into the agentic realm that everyone is talking about.

- There is still a lot of room to improve the models with current techniques. Getting massive gains from more pre-training now seems likely to be too expensive, but there appears to be a lot of opportunity to improve the models through reasoning and reinforcement learning.

- The fundamental problems of these models such as hallucinations are not yet solved (and there is no indication that continued scaling along these three axes will solve them). These models work most of the time for most things but are not reliable and can fail in simple ways.

- There is no moat, at least not yet. Today's best-performing models will be surpassed by another company's within months, if not weeks. Add-on features and capabilities created by one company will soon be copied by the others.

- We are still very early in AI adoption, so we don't know yet where it will become most prevalent. Some conclusions we can draw from the HBR article:

  - The models have become capable enough to bring major benefits to professional coders. That means if you're a coder, you'd better be using these tools to assist you, and we can expect other technical disciplines to show significant adoption as the models improve.

  - The massive jump in use of these models for 1-on-1 interactions (therapy, companionship) suggests that the most frequent use in the future, especially outside of work, will be relationship-oriented (in other words, an ongoing interaction with a specific model persona for a specific purpose).

- This landscape is going to change tremendously as the models become more agentic instead of just conversational, and it’s too early to predict how we will use and interact with agents.