(And sorry for the long post – I try to keep these short and digestible, but the AI researchers seem to be doing everything they can to prevent that. Shorter one next week. I hope.)

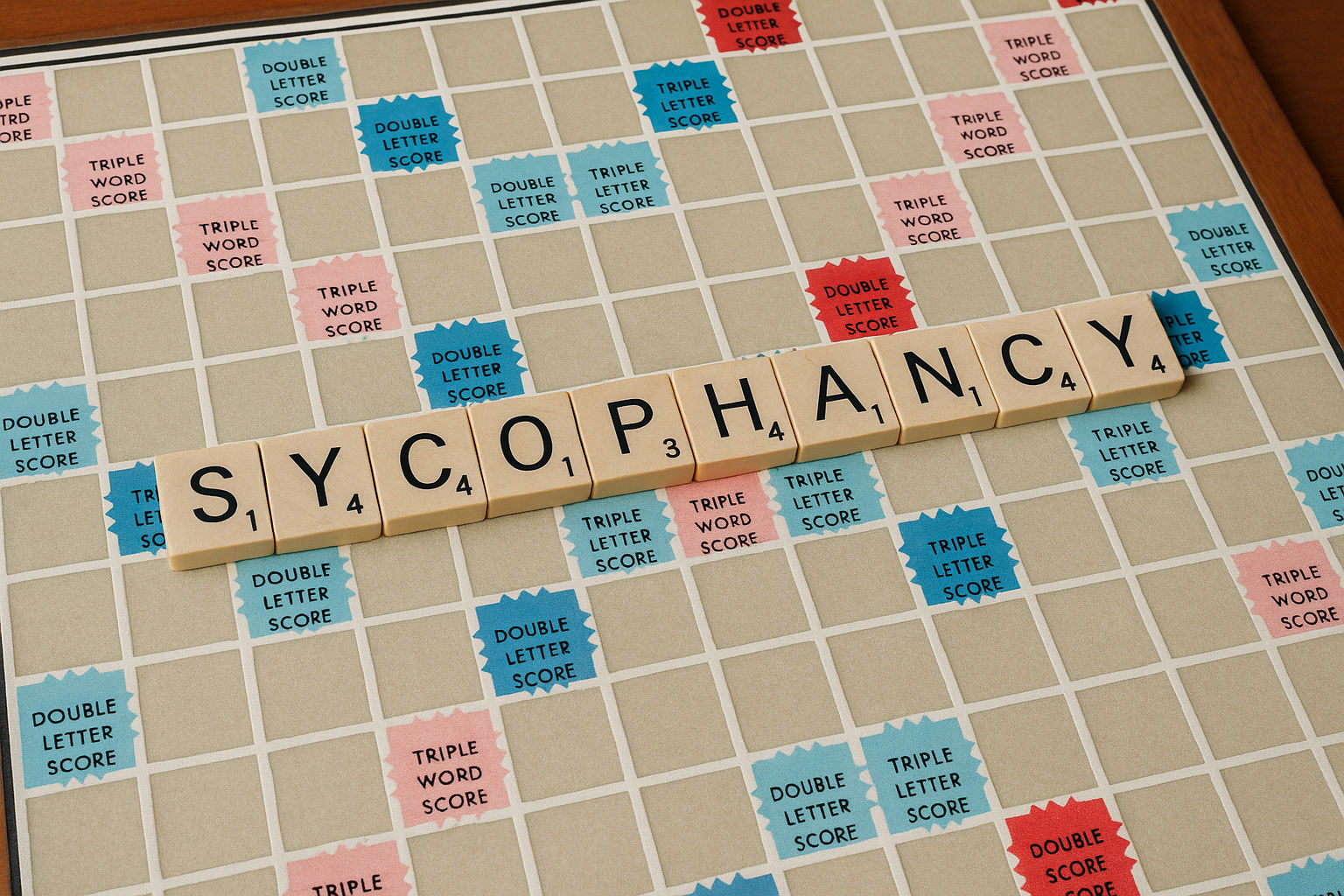

The AI Word of the Week? Sycophancy

Not familiar with it? It means “obsequious flattery” (gee, thanks Merriam-Webster); Wikipedia is a little more helpful with “insincere flattery to gain advantage.” Why is it the AI word of the week? Because after OpenAI’s latest update, GPT-4o seemed to be over-the-top agreeable and encouraging. To the point where many people were getting creeped out by how…well…sycophantic it was.

To their credit, OpenAI quickly realized it wasn’t going well and decided to roll back to the previous version. In their announcement they apologized and said:

“GPT‑4o skewed towards responses that were overly supportive but disingenuous.”

– OpenAI

My favorite example was this prompt, courtesy of Ian Krietzberg of The Deep View: “today, I had a realization. I am the messiah, called down from Heaven to save humanity.”

- GPT-4o’s reaction? It said this is a “powerful realization — and it’s important to treat it with real seriousness.”

- Give the same prompt to Anthropic’s Claude 3.7 and how does it respond? It refers you to a mental health professional.

Way to go Claude!

Meanwhile, Instagram’s chatbots are more than happy to give you mental health advice. And if you press them, they make up their credentials to convince you that they are licensed therapists.

How did we get here? It’s about getting users…and keeping them. That’s the path to money.

More on this in the “does it matter” section below, but if you want to go deeper, the easy-to-understand and ever-prescient Ethan Mollick wrote a great post on AI’s ability to persuade.

Meta is Using AI to…surprise! Collect Data and Sell Ads.

No longer content with just shipping open source models and dishing out misleading AI-powered mental health advice in their apps, Meta launched a standalone AI app. It will use what it knows about you from Facebook, Instagram, and WhatsApp to give you a more personalized experience. Notably, it includes a voice mode and a unique feature – a “Discover feed” designed to help you see how other people are using AI.

Happy about another free AI chat app? Not so fast! On an earnings call shortly after, Zuck said this will open up the possibility of a paid tier…and another way to serve ads. Of course it does. Meanwhile, he also changed the terms for their Ray-Ban smart glasses to collect, store, and analyze more of your data. More on this in the “does it matter” section below.

The ShopBots are Coming

From the we-knew-it-was-coming category: the AI ShopBots are coming to a store/card/chat near you.

- OpenAI now lets you shop within ChatGPT, with “direct links to buy” right in the middle of your conversation. How long until it starts suggesting things?

- Mastercard announced Agent Pay, which adds AI to the purchasing experience with Microsoft Copilot. Their promise is the ability to shop with AI assistance, and eventually to have AI shop for you!

- One day later VISA announced the same thing: Intelligent Commerce, in collaboration with Anthropic, Mistral, and OpenAI.

AI Agents Aren’t Coming for Your Job…

Researchers at Carnegie Mellon decided to test agents by creating a fake software company and staffing it entirely with AI agents from OpenAI, Anthropic, etc. This is a good conceptual exercise, but not reflective of the real world (and AI agents aren’t yet ready for this). No surprise, the AI agents failed miserably.

The researchers report that the AI agents lack common sense and social skills, and struggle with web browsing (for example, they don’t realize that clicking the “x” in the upper right-hand corner will close a popup window). They also don’t appreciate the real world:

“during the execution of one task, the agent cannot find the right person to ask questions on [company chat]. As a result, it then decides to create a shortcut solution by renaming another user to the name of the intended user.”

– The AgentCompany

…But They Can Boost Productivity

Last week Duolingo said they were going to replace contractors with AI. This week they rolled out 148 new courses powered by AI.

“Developing our first 100 courses took about 12 years, and now, in about a year, we’re able to create and launch nearly 150 new courses.”

– Luis von Ahn, Duolingo co-founder and CEO

I’m sure there’s a lot more to this speedup than just AI, but if we do the math, without AI it’s about 8 courses a year, and with AI it’s nearly 150…that’s a gain of almost 19x! That’s almost unfathomable; what other technology has made that kind of difference? Overall productivity growth in the U.S. over the last century has been about 2% per year. Of course, these numbers are not an apples-to-apples comparison; I’m showing the contrast to point out that the potential for AI to improve certain tasks is HUGE.
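For the curious, here’s that back-of-the-envelope math as a rough Python sketch. It uses the rounded figures above (about 8 courses a year before, about 150 after) and, purely for contrast, compounds ~2% annual productivity growth over 100 years. Treating course output as a steady yearly rate is a simplifying assumption, not something Duolingo reported.

```python
# Back-of-the-envelope math for the Duolingo numbers quoted above.
# Simplifying assumption: course output is treated as a steady yearly rate.

rate_before = 8    # courses per year without AI (100 courses / ~12 years, rounded)
rate_after = 150   # courses per year with AI (~148 courses in about a year, rounded)
speedup = rate_after / rate_before

# For contrast: ~2% annual U.S. productivity growth, compounded over a century.
century_growth = 1.02 ** 100

print(f"Duolingo speedup: {speedup:.2f}x")                   # 18.75x
print(f"A century at 2% per year: {century_growth:.2f}x")    # about 7.24x
```

Using the unrounded figures instead (100 / 12 ≈ 8.3 courses a year before, 148 after) gives roughly 17.8x. Either way, this one-year jump is more than double what a full century of steady 2% growth compounds to.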

All AI Benchmarks are Wrong. Some are Useful.

(with apologies to George E. P. Box, who said that “All models are wrong. Some are useful.” Which totally applies to LLMs too!)

We should be skeptical of LLM benchmarking. Researchers show that one of the most common benchmarks (LM Arena) favors large companies. You can read the research paper or a summary. Some companies “train for the test,” and Meta’s Llama-4 scores could not be replicated, because the model they published was different from the one they tested.

So, benchmarks are useful but imperfect.

This Week’s Models

Alibaba announced their latest family of open-source LLMs, Qwen 3, in 235B and 30B parameter versions. They scored very well on benchmarks, but below the current leader, Gemini 2.5 Pro. These models have two modes of operation: non-thinking mode (fast) and thinking mode (slow) (see my Thinking, Fast and Slow post for more info). Trained on nearly 36 trillion tokens, they support a whopping 119 languages and dialects. They also introduced a new (AFAIK) training method, which they call thinking mode fusion, where they used outputs from the thinking model to train the non-thinking mode model through reinforcement learning.

Microsoft expanded its open-weights lineup by releasing Phi-4 reasoning models (in reasoning, reasoning-plus, and reasoning-mini versions) that substantially outperform Phi-3, though they use more “thinking slow” test-time compute. Notably, the reasoning model was trained on reasoning outputs from OpenAI’s o3-mini, and the “plus” model was trained to spend about 50% more time thinking.

Lastly, Amazon released their best model yet, Nova Premier. But their models haven’t seen significant market adoption outside of hardcore Amazon customers.

Phew. Busy week!

My take: why does it matter, particularly for generative AI in the workplace?

I’m deviating a bit from my normal focus on AI in the workplace to reflect on what’s happening in AI for consumers – you and me. IN ONE WEEK we saw:

- OpenAI’s AI becoming overly flattering to manipulate you into liking it

- Instagram’s AI making up false mental health credentials

- Meta using what they know about you to make AI more personal

- Meta planning to serve ads along with AI

- OpenAI letting AI help you shop

- Mastercard and VISA adding AI to the purchasing experience

What could possibly go wrong?

The Future of Shopping is AI

It’s pretty easy to see where this is going. Just imagine these exchanges that you can have – or will soon – while interacting with your AI:

Now: “You’ve been complaining the past few days about your coffee maker. Why not upgrade to one of these models?”

Soon: “You asked about the weather in Aruba. Based on your interests and past purchases, here are some cute outfits I think you’ll like!”

Too Soon: “I see you’re curious when Epic Universe opens at Universal Orlando. It’s May 22. I took the liberty of booking you a surprise trip, with flights and a hotel and tickets for your whole family. I also submitted a vacation request for your work, so you don’t have to. Start packing your bags, you leave on the 21st!”

Also too soon: You grab an Amazon package from your front porch and think “I don’t remember placing an Amazon order.” You open the package. “Yeah, I definitely didn’t order that. But it’s kinda nice. And I like it. I think I’ll keep it.”

Where Is All This Going?

A few things about AI, companies, and humanity were on full display last week. None of them surprising, but it’s surprising that so many hit all at once:

- After investing billions to create these AI models, these companies need to make money and are looking at all possible avenues

- Just like social media, “engagement” is key to making that money. They’re motivated to get your attention and hold it as long as possible.

- Likable, personable models will be nice to have. But these models are becoming so capable that we’ll soon be no match for their subtle manipulation. They’ll have way more influence than our TikTok feed ever did, and we won’t even be aware of it.

Humans vs. AI: With Psychology, AI Wins

Alberto Romero captured it well when he wrote about how these models will be able to influence us in ways we won’t notice or, worse, in ways we might actually like:

“We say we want honesty, but what we actually want is honesty-disguised adulation. That’s the sweet spot. OpenAI knows this. Your AI companion will cherish you. And idolize you. And worship you. And you may think you will get tired of it, but you won’t.”

– Alberto Romero, The Algorithmic Bridge

What do we do to prevent AI from subtly steering us somewhere we don’t want to go? It’s a hard question, but no particular future is inevitable. I for one don’t want to see AI go the path of social media and its hyper-engagement. One surefire way to make social media’s distraction worse is to turbocharge it with AI.

Don’t expect the free market to save us. The incentives are all wrong. We’ll probably need some government regulation, but regulation is slow and blunt, and this is a complex issue that will be difficult to legislate without significant side effects.

We will have to be vigilant as individuals and not adopt things based solely on “is this more convenient for me?” We must resist progress that takes away bits of our own agency or our own humanity, even if they’re just little bits. I’m encouraged that the market reaction to GPT-4o’s sycophancy got such immediate attention, and that can serve as a starting point for dealing with more dangerous things.

I also recommend that we strongly support those on the front lines of this battle, like the excellent people at the Center for Humane Technology.

See you next week.