Glean held their winter announcement event and showed some really cool stuff around agents (warning: the definition of an AI agent is still whatever you want it to be). They had a very nice demo showing how you can build an agent visually by dragging and dropping…and even by telling an LLM "I need an agent that does so-and-so," after which the LLM builds a first draft of the agent that you can then edit as needed. This is cool, HOWEVER…

- Their LLM-to-agent prompt in the demo was very prescriptive (it was basically a step-by-step guide), so it's unclear how well this will translate to real-world use by someone who wants to build an agent with natural language.

- Their visual workflow editor appears limited in that it's not dynamic. The LLM plans only the agent's creation; it doesn't do any dynamic planning at execution time (one of the two requirements I have for what makes an AI agentic). If the workflow is fixed, I would call this an assistant rather than an agent. Many work processes follow a pre-set, prescribed workflow, and for those an assistant is sufficient, but a lot of the excitement is about agents that plan their own steps at the time of execution.

- Their examples were quite simplistic. Does my manager really want me to send her a list of all of my open Jira tickets? Especially if she can just go to Jira and filter all of the open tickets that are assigned to me? We have a ways to go to figure out what agents are capable of within the enterprise.
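To make the assistant-versus-agent distinction from the second point concrete, here is a minimal Python sketch. Everything in it is hypothetical: the step names, the ticket data, and `plan_next_step`, which uses hard-coded rules where a real agent would ask an LLM to choose the next step. The assistant runs a sequence that was fixed when it was built; the agent decides each step while it runs.

```python
from typing import Callable, Dict, Optional

# Each step takes the shared context and returns it updated.
Step = Callable[[dict], dict]

def fetch_tickets(ctx: dict) -> dict:
    # Hypothetical stand-in for a Jira query: copies whatever tickets the caller supplied.
    ctx["tickets"] = list(ctx.get("source_tickets", []))
    return ctx

def summarize(ctx: dict) -> dict:
    ctx["summary"] = f"{len(ctx['tickets'])} open ticket(s)"
    return ctx

def send_email(ctx: dict) -> dict:
    # Hypothetical: only records that a message would have been sent.
    ctx["sent"] = True
    return ctx

STEPS: Dict[str, Step] = {
    "fetch_tickets": fetch_tickets,
    "summarize": summarize,
    "send_email": send_email,
}

def run_assistant(ctx: dict) -> dict:
    # Assistant: the sequence was fixed when the workflow was built,
    # so every run executes the same steps in the same order.
    for name in ("fetch_tickets", "summarize", "send_email"):
        ctx = STEPS[name](ctx)
    return ctx

def plan_next_step(ctx: dict) -> Optional[str]:
    # Hypothetical planner. A real agent would ask an LLM to pick the next
    # step from the current state; hard-coded rules stand in for that here.
    if "tickets" not in ctx:
        return "fetch_tickets"
    if not ctx["tickets"]:
        return None  # nothing to report, so stop early
    if "summary" not in ctx:
        return "summarize"
    if not ctx.get("sent"):
        return "send_email"
    return None

def run_agent(ctx: dict, max_steps: int = 10) -> dict:
    # Agent: the next step is chosen at execution time, so different
    # inputs can lead to different sequences of steps.
    for _ in range(max_steps):
        name = plan_next_step(ctx)
        if name is None:
            break
        ctx = STEPS[name](ctx)
    return ctx
```

With no open tickets, `run_assistant` still sends an empty report, while `run_agent` stops right after fetching because it decides what to do next based on what it found.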

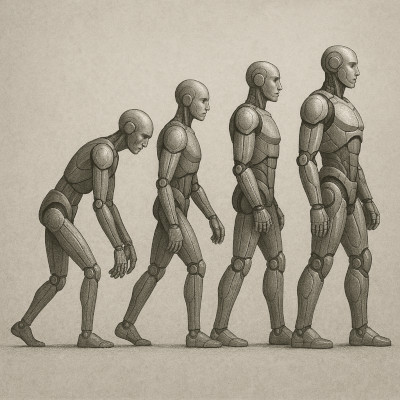

Sam Altman gave an update on OpenAI's roadmap on X, and it's super interesting because he says they will ship GPT-4.5 next (not GPT-5!). Instead, they will "release GPT-5 as a system that integrates a lot of our technology." In other words, GPT-5 will be the collection of all of their models, brokered by a "routing agent" that chooses which model to use based on the nature of the request. This is further evidence that the scaling "law" is no longer a law: as we continue to scale models (larger models, more compute, more data), we're getting smaller and smaller improvements, hence no standalone GPT-5 model. DeepSeek (and others) have shown that future gains will be driven primarily by different training techniques rather than simply by more scale. Gains can also come from a newer dimension for improving models: scaling the "reasoning" the model does at runtime (the amount of time it spends thinking, also called test-time compute).
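OpenAI hasn't said how its router would decide, so the Python sketch below only illustrates the general idea under simple assumptions: a hypothetical `route` function that sends each request to one of two placeholder model names using a keyword heuristic. The model names and the hint list are invented for illustration.

```python
from dataclasses import dataclass

# Placeholder model names for illustration; not real model IDs.
FAST_MODEL = "fast-chat-model"
REASONING_MODEL = "reasoning-model"

# Hypothetical cues that a request needs multi-step reasoning.
REASONING_HINTS = ("prove", "step by step", "debug", "plan", "calculate")

@dataclass
class Route:
    model: str
    reason: str

def route(request: str) -> Route:
    """Pick a model for a request using a simple keyword heuristic."""
    text = request.lower()
    if any(hint in text for hint in REASONING_HINTS):
        return Route(REASONING_MODEL, "looks like a multi-step reasoning task")
    if len(text.split()) > 200:
        return Route(REASONING_MODEL, "long, complex request")
    return Route(FAST_MODEL, "short, direct request")
```

Substring matching like this is crude (a question about a "planet" would match "plan"), which is one reason a real router would more likely use a trained classifier or an LLM to make the call.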

Finally, a recommendation that's from last month rather than this week: an article worth reading on how to think about AI's impact in the short term and the long term.

My take on why it matters, particularly for generative AI in the workplace

Glean showed a vision of self-service agents where everybody can build their own agent. I think that makes sense, but using those agents to populate a shared library that anyone can use might not be a good idea, and we should learn from the past. Companies tried that model with business intelligence (BI) reports and dashboards, and it usually resulted in a massive repository of poorly documented, frequently redundant (and maybe even noncompliant) items. Because employees couldn't find what they needed, they usually just created a new one…adding to the pile and making the situation worse.

If you haven't experienced that, consider OpenAI's GPT Store. How do you choose the right "resume writer" from hundreds (or maybe thousands) of options? I believe a more measured approach is required: what businesses need is a library of far fewer, more capable, and more versatile agents.