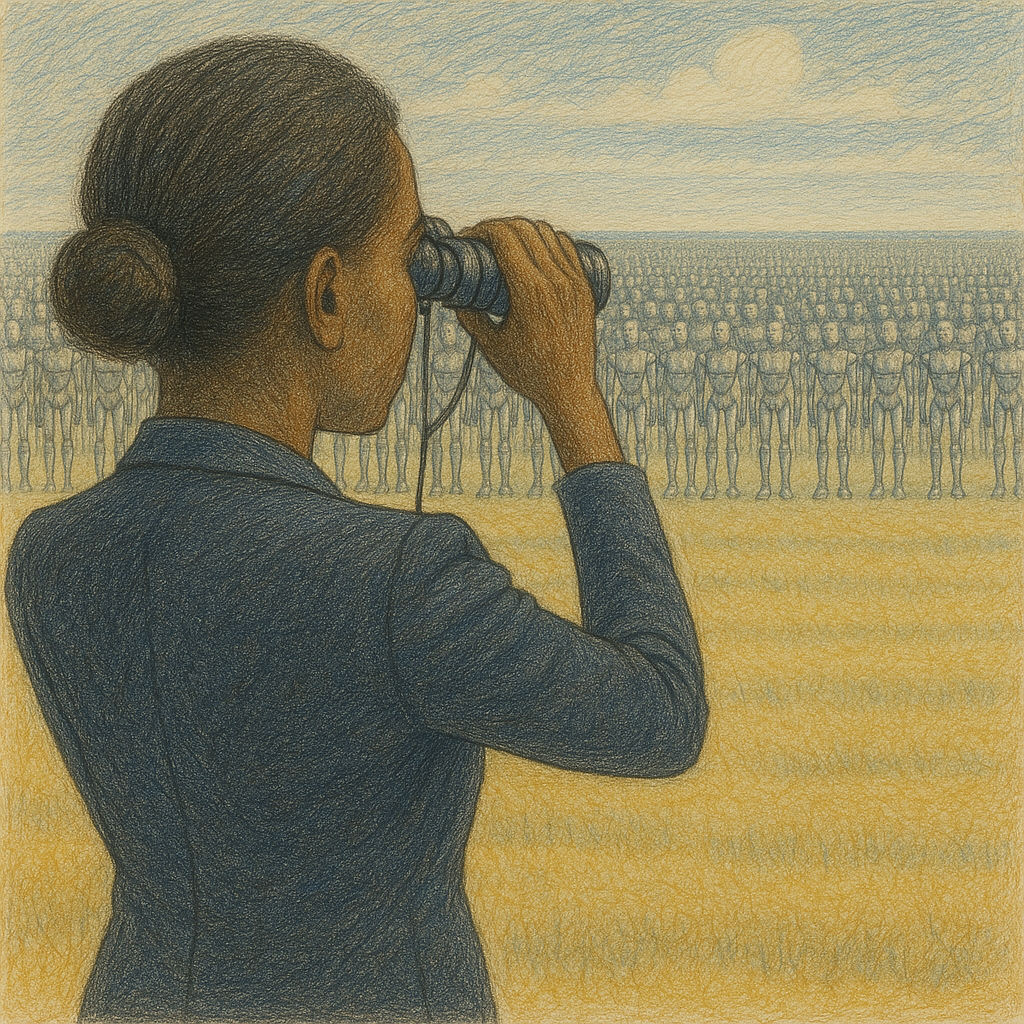

William Gibson’s line “the future is already here, it’s just not very evenly distributed” partly applies to AI agents: they have been deployed successfully in limited, constrained applications. But more general, ubiquitous AI agents are still just a vision that hasn’t yet materialized, and I think it’s going to be a while before they do.

This past week, the news shifted away from specific technology announcements and toward the bigger picture: deploying AI agents in the workplace. To me this was a welcome move away from the hype and toward a practical “here’s where we are, and here’s how we get to the next stage” conversation. Let’s dive in.

AI Agents: Hype or Reality? Yes.

Yoav Shoham, co-founder of AI21 Labs, says it better than I can in his article about AI agents, “Don’t let hype about AI Agents get ahead of reality.” In short, AI agents will be transformative, but not yet: we have a few things to work out before we can deploy them reliably and at scale. He does a great job explaining those things in a straightforward way.

“There is enormous potential for this technology, but only if we deploy it responsibly.”

– Yoav Shoham, Don’t let hype about AI Agents get ahead of reality

AI-First Companies Will Win the Future

Boston Consulting Group (BCG) sees AI adoption as critical to future corporate success and provides a 15-slide presentation with guidance on becoming AI-first.

They outline five business changes necessary to enter the AI age:

- Capitalize on what is uniquely yours to build a bigger moat

- Reshape your P&L to focus on AI-driven improvements and innovation

- Build a tech foundation that allows for decentralized AI deployments

- Create an operating model that is AI-first (ask “can AI do this?” at every step)

- Focus on lean teams with specialized skills that are highly valuable (letting AI handle as much non-specialized work as it can)

They also specify five steps for adopting AI:

- Create an AI agenda that is business-led, not technology-led

- Embrace AI in your daily work

- Anticipate the impact on your workforce to adapt and reskill talent

- Prove AI success in smaller, high-value initiatives and then scale

- Reallocate resources to fund what is working – it takes real investment

If this interests you, here is a link to their full presentation.

Scale Generative AI for Business Transformation

Capgemini (with Everest Group) released a 20-page report on the current state of enterprise AI adoption (83% of enterprises have deployed it or are actively seeking to!), along with a guide to how you can be part of it. They recommend five steps for an enterprise-wide AI strategy:

- Align AI strategy with business objectives (not technical experimentation)

- Identify and prioritize use cases (high-value, low-risk, smaller ones first)

- Define measurable goals and KPIs (so you can measure success and see where the value is)

- Build strong AI leadership (without good leadership, change management fails because people revert to doing what they’ve always done)

- Define budgeting and funding (because it will take an investment)

They also provide guidance for an operating model for AI along with recommendations for people, technology, and governance for responsible AI. If this interests you, here is a link to their full report.

Enterprise Agentic AI: Lessons from the Frontlines

In a brief but potent blog post, Alan Pelz-Sharpe of Deep Analysis summarizes lessons learned from early adopters of agentic AI.

- You need a business strategy, not an AI strategy (AI is a business transformation tool)

- Don’t start with AI. Start with business problems.

- Before deploying AI agents, audit your data. It’s the foundation.

- Start small. Prove value in one area, then scale.

- Treat AI adoption like organizational change, not just a software rollout.

Good advice, from real-world experience. If you’re entering the agentic AI arena, Alan’s post is a must-read.

GenAI > TL;DR?

If you’re not familiar with TL;DR, it has become the fashionable way to say “summary.” Officially it stands for “Too Long; Didn’t Read,” but I always say it really stands for “Too Lazy; Didn’t Read.” If something is long (at least if it’s well written), it’s long for a reason. The world isn’t black and white, and virtually nothing summarizes well into a few sentences. Every time we summarize something, we lose detail, nuance, and richness.

Yet summarization has been held up as one of generative AI’s great strengths. It can be a big time-saver: if an AI does the reading for us and summarizes the key points, we can decide whether to go in depth and read the original source for the full story, or skip it because our time is better spent elsewhere.

We know generative AI summaries aren’t perfect and sometimes contain hallucinations, but aside from the occasional hiccup, they seemed very good at this. However, a study released in April (but only now making the rounds) indicates that when AI is used to summarize scientific literature, the summaries are too broad: they state conclusions more generally than the original research supports.

The risk is that overgeneralization can lead to conclusions that the specific evidence does not support. Note that this is different from hallucination (stating something that isn’t in the original text). Here the LLM fails to properly interpret and preserve the context of the information.

They tested 10 of the most prominent models and found:

- a strong bias towards overgeneralizing scientific conclusions

- LLM summaries were nearly five times more likely to contain broad generalizations than human-generated summaries

- newer models tended to perform worse in generalization accuracy than earlier ones

If you’ve been reading my blog, this shouldn’t surprise you. These models don’t truly understand what they’re reading; they identify patterns and predict likely follow-up patterns. So it’s no wonder they struggle to stay true to the original meaning of the text. Two of the biggest challenges were:

- maintaining tense (a frequent error was switching past tense to present and vice versa)

- applying proper scope (a frequent error was summarizing something that was true in a specific situation as something that was generically true, and vice versa)

And of course, not all models are the same. Anthropic’s Claude was markedly better than the others tested (models from OpenAI, Meta, and DeepSeek; the study did not test models from Google). Claude had the fewest overgeneralizations, the fewest specific algorithmic overgeneralizations, the highest consistency across prompts, and the highest consistency between older and newer models. Score another victory for Anthropic’s Constitutional AI approach.

“Claude was the most faithful in text summarization.”

– Generalization Bias in LLM Summarization of Scientific Research

My take on why it matters, particularly for generative AI in the workplace

I’ve shared some of my perspective in the earlier sections of this post, so I will summarize what matters here:

- It’s still early: AI agents are overhyped today but will bring value tomorrow, with huge benefits for individuals and enterprises alike. While some companies are seeing success in narrow areas, it’s going to take a while before AI agents can be used broadly. They suffer from a number of shortcomings (hallucinations, overgeneralization, unreliability, overconfidence) that we don’t yet know how to solve or control (or even reliably detect!).

- Enterprises need an AI strategy to guide their adoption of generative AI and, eventually, agentic AI, or they risk being left behind. It’s still new, but enough companies are doing this that good guidance is available from organizations like BCG, McKinsey, and Capgemini.

- LLMs are incredibly valuable when used for the right things, but they aren’t reliable and we don’t yet know how to make them reliable. We’re gonna have to figure out how to address some of their shortcomings, or at least identify when they mess up, to be able to use them in high-stakes environments. We can’t even trust them to convey an accurate summary of nuanced information.

- Or can we? As I discussed last week, which model you use, what it was trained on, and how it was trained all matter. Anthropic’s Claude consistently comes out ahead of the others on safety and truthfulness. It’s still not perfect (see last week’s post about how all the LLMs tested resorted to blackmail when cornered), but it gives us hope that we may find a path to safe and reliable generative AI.

“The problem isn’t refusal, it’s false fluency. Earlier models often declined to summarize unclear information. Newer models provide confident outputs that feel complete but carry subtle shifts in meaning. LLMs trained on simplified science journalism and press releases prioritize clarity over accuracy, resulting in polished but less trustworthy summaries that often overlook precise research…Users want confidence, not caveats. The market rewards models that sound authoritative.”

– The Deep View, ChatGPT 5x Worse than Humans at Summarizing