A Chinese heavyweight and a newcomer release new open models that compete with the best that the U.S. has to offer. But first, a look at an AI agent for the enterprise, a few weeks after OpenAI released their public AI agent…

The First AI Agent for the Enterprise?

Writer released what they are calling the first general-purpose AI agent for the enterprise. While their detailed post carries a lot of hyperbole (not many companies talk about being on a path to AGI, except for the LLM vendors), their agent performs well on the benchmarks. They didn’t compare it to OpenAI’s agent (released only a few weeks ago), but they were able to beat Manus, which was perhaps the first general-purpose AI agent.

Source: https://writer.com/engineering/writer-action-agent/

What is ChatGPT Used For?

OpenAI released their first economic impact analysis based on use of ChatGPT. Aside from the almost complete lack of quantitative economic impact data and their self-hype about how great OpenAI is, they are able to offer some insights into how it’s being used:

- 20% for learning and upskilling

- 18% for writing and communication

- 7% for programming, data science, and math

- 5% for design and creative ideation

- 4% for business analytics

- 2% for translation

You may have noticed that’s only 56% of use. What about the rest? The report covers usage across their entire user base, not just business use. There’s probably a lot of use that won’t impact economic productivity: things like conversation/companionship, cheating on homework, and general messing around.
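If you want to check that arithmetic yourself, here is a quick sketch in Python. The numbers are just the ones listed above; the variable names are mine and purely illustrative.

```python
# Usage shares (percent of ChatGPT use) as listed in the bullets above.
# The dictionary and variable names are illustrative, not from OpenAI's report.
usage_shares = {
    "learning and upskilling": 20,
    "writing and communication": 18,
    "programming, data science, and math": 7,
    "design and creative ideation": 5,
    "business analytics": 4,
    "translation": 2,
}

# Total share covered by the listed categories.
accounted = sum(usage_shares.values())

# Whatever is left over is usage the list does not explain.
unaccounted = 100 - accounted

print(f"Accounted for: {accounted}%")
print(f"Unaccounted for: {unaccounted}%")
```

So the listed categories leave a bit under half of all usage unexplained.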

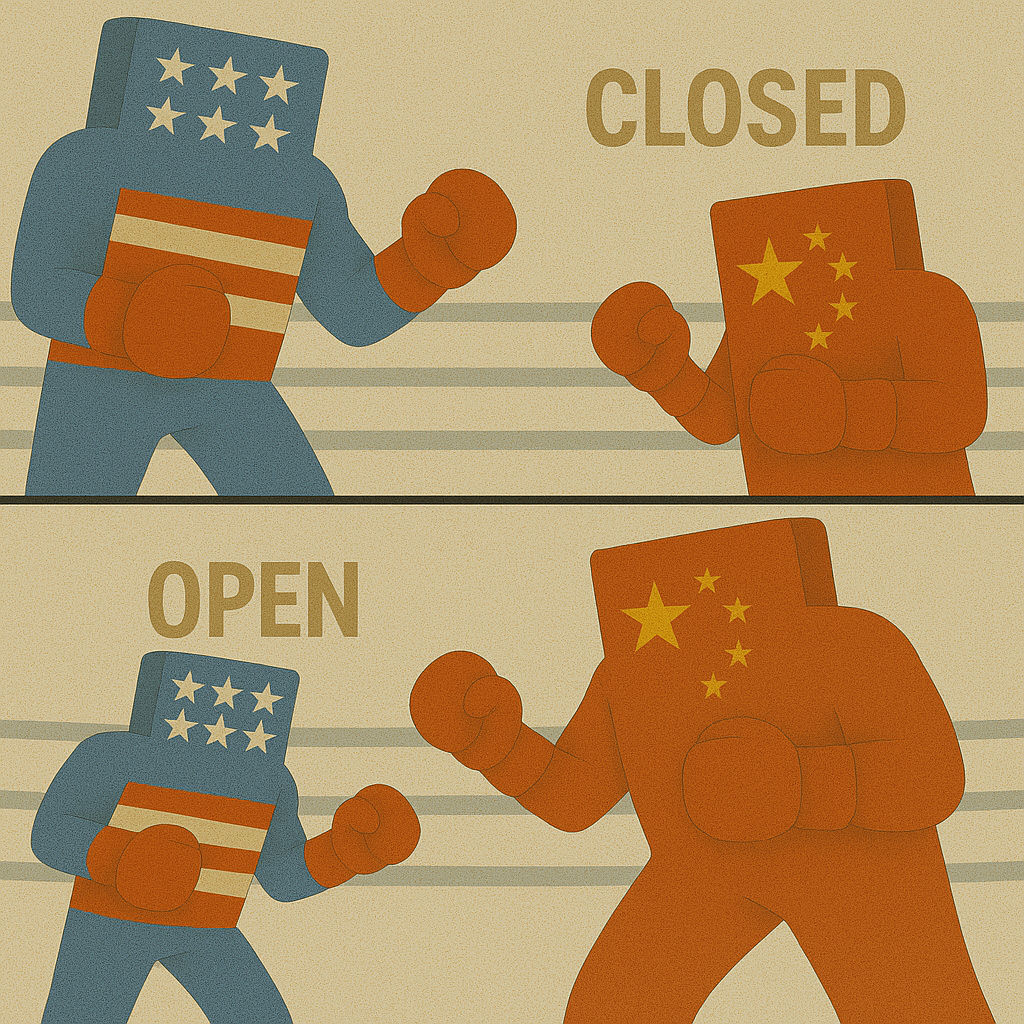

More Open Chinese Models

Chinese startup Z.ai released GLM-4.5, a model that shows strong ability in all the exciting places: reasoning, coding, and agentic ability. What’s more, it does this at a small fraction of the cost of DeepSeek. DeepSeek costs 7.5x more!

And Chinese behemoth Alibaba topped off their series of Qwen3 releases with a FOURTH updated version of their Qwen model, this time a Qwen3 thinking (reasoning) model. So in a matter of weeks they released near state-of-the-art open-weight models: a standard model, a coding model, a translation model, and a reasoning model.

So in the last few weeks from China we have:

- An open-weights model that is (arguably) the best at agentic AI (Kimi-K2).

- An open-weights model that is (arguably) the best non-reasoning LLM (Qwen3-Instruct).

- An open-weights model that is (by some benchmarks) the best reasoning model (Qwen3-thinking).

- An open-weights model that is almost as good as DeepSeek, but at roughly 1/7th the cost (GLM-4.5).

“Something that has become undeniable this month is that the best available open weight models now come from the Chinese AI labs.”

– Simon Willison

Open weight models have advantages. They’re:

- Cheap (the only cost is compute infrastructure)

- Private, with full data protection (you can run them on your own servers)

- Consistent (you can use them as long as you want because they won’t be discontinued)

But…would you trust a model from China? We know that such models don’t like to talk about things that the Chinese Communist Party doesn’t want them to talk about. That may not matter much in a business/RAG setting where they’re supposed to talk about company stuff, but we also know that different models exhibit different personalities:

“Google’s Gemini models proved strategically ruthless, exploiting cooperative opponents and retaliating against defectors, while OpenAI’s models remained highly cooperative, a trait that proved catastrophic in hostile environments. Anthropic’s Claude emerged as the most forgiving reciprocator, showing remarkable willingness to restore cooperation…”

– Strategic Intelligence in LLMs

Keeping this in mind, are Chinese models really viable for use by US companies?

My take on why it matters, particularly for generative AI in the workplace

Writer’s Agent

The benchmark scores for Writer’s workplace AI agent are impressive. But will it be practical for real-world use cases in the enterprise? It will take time to tell. They’re rolling it out in beta to existing customers to get rapid feedback and iterate (good move!).

There are (at least) two obstacles. One, will a general-purpose agent provide real value? Most valuable use cases in the enterprise require at least some specificity to the domain or task at hand, and a general agent isn’t likely to be able to add much value. Two, not many AI agents have been deployed at companies yet because the LLM error rates are high, and it’s hard to know when the agent succeeds or fails.

Let’s look at the GAIA benchmark. Writer claims 88% success for level 1 (the easiest) tasks. In what business application is a 12% failure rate acceptable? At level 3 (the hardest tasks) the success rate falls to 61%. That is slightly better than Manus (58%), and admittedly these tasks are pretty hard…but if a coworker gets something right only about 2 out of 3 times, it won’t be long before I stop asking them to do it, and the same goes for an agent.
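To make those numbers concrete, here is a small back-of-the-envelope sketch in Python. It only uses the success rates quoted above (88% and 61% for Writer, 58% for Manus at level 3); the function names are hypothetical helpers of mine, not anything from GAIA or Writer.

```python
# Success rates (percent) quoted above for the GAIA benchmark.
# The keys and helper names are illustrative assumptions.
gaia_success = {
    "Writer, level 1": 88,
    "Writer, level 3": 61,
    "Manus, level 3": 58,
}


def failure_rate(success_pct: int) -> int:
    """Failure rate in percent, given a success rate in percent."""
    return 100 - success_pct


def expected_successes(success_pct: int, attempts: int) -> float:
    """Average number of tasks that succeed out of `attempts` tries."""
    return success_pct * attempts / 100


for name, pct in gaia_success.items():
    print(
        f"{name}: fails {failure_rate(pct)}% of the time, "
        f"about {expected_successes(pct, 3):.2f} successes per 3 tasks"
    )
```

In other words, 88% success means roughly one failure in every eight tasks, and 61% success is a bit worse than 2 out of 3.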

“AI ‘Agents’ will be endlessly hyped throughout 2025 but far from reliable, except possibly in very narrow use cases.”

– Gary Marcus, 25 AI Predictions for 2025

I agree with Dr. Marcus, not because AI agents are no good, but because they need more time, and the more generalized the agent, the longer it’s likely to take. Writer’s agent is going to keep getting better (maybe very quickly). More importantly, it can act as a foundation for developing other, more specialized agents that will bring a lot of value. So I’ll be watching this closely.

“I don’t expect agents to go away; eventually AI agents will be among the biggest time-savers humanity has ever known.”

– Gary Marcus, AI Agents have, so far, mostly been a dud

OpenAI’s Report

I’m perplexed by this “report.” I don’t know why they released such a lightweight piece with such a strong title. The absence of real “economic impact” numbers is glaring. The closest they got was:

- “the pace of AI adoption suggests significant economic impact” and

- “nearly all forecasts suggest that AI will increase productivity…[and its] impact on economic growth would be meaningful.”

That is WEAK. If you’re going to call it “Unlocking Economic Opportunity,” you should say something about real economic impact rather than random stats and vague speculation. Maybe they felt they needed to follow Anthropic, which in February announced the Anthropic Economic Index to study AI’s impact over time (but it’s mostly usage patterns; they need more time to measure economic trends and impact).

Don’t get me wrong. I think AI will have a huge economic impact. We’re just not ready to measure it yet, because we’re just getting started!

China’s Open Models

China is ahead in open models. The US is ahead in closed models (and GPT-5 is reportedly coming any day now, potentially expanding that lead).

Significantly, Z.ai (at that time known as Zhipu) was blacklisted by the US for chip exports in January, before Trump took office. That they produced a state-of-the-art model that is extremely cost-effective after 6 months on the blacklist is an indication that the chip sanctions aren’t really working. Or, if they are working, the tech/algorithms are moving fast enough to overcome the sanctions.

There are many questions here. Most notably, will the US or China win? Will open-weights or closed models win? Will there even be winners in the end? Stay tuned.