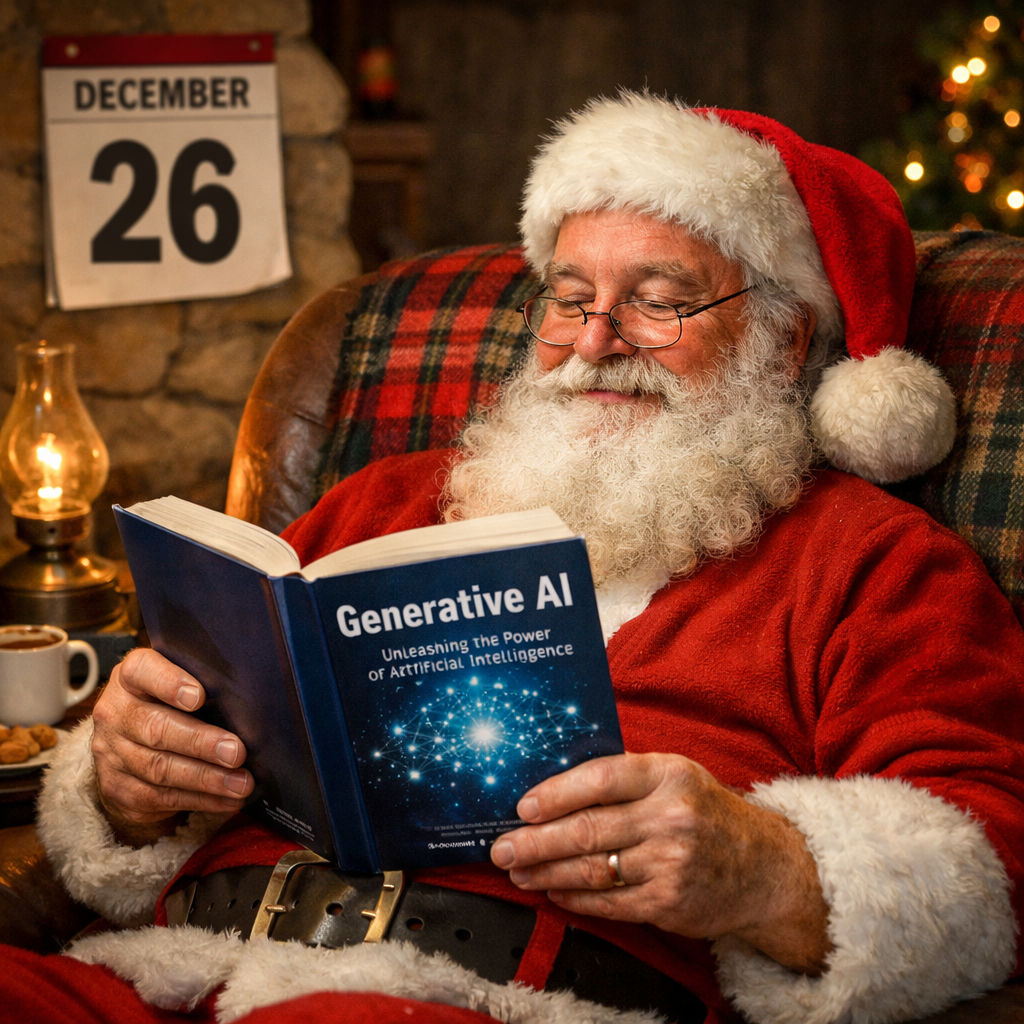

I read a lot about AI (probably too much). Much of it goes out of date within a few months (or weeks). But some content stays important over time.

Here are some of the most important pieces on AI from the last year or so. Read them over the break – or when you come back in January. It was hard to pick just a few. Other authors (Raphaëlle d’Ornano, Alberto Romero, Gary Marcus, and many others) also produce fantastic content, but I wanted to keep the list concise.

They’re in order from shortest to longest, so you can choose a piece that fits the time you have available.

- The Bitter Lesson

- We must build AI for people, not to be a person

- AI eats the world

- AI as Normal Technology

- Co-Intelligence

The Bitter Lesson (Richard Sutton)

This 1½-page essay is worth reading to understand a long-running trend: as more computing power has become available, general methods that exploit it (search and learning) have consistently outperformed approaches built on hand-coded human knowledge. There’s no guarantee this trend will continue, but so far it has been something like a Moore’s Law of AI.

We must build AI for people, not to be a person (Mustafa Suleyman)

In a 4,600-word essay on his blog, the CEO of Microsoft’s AI group points out people’s tendency to anthropomorphize AI and argues that this is a problem. AI is not conscious, but it can appear to be…and it’s only going to get more convincing over time. He calls this “seemingly conscious AI,” describes its dangers, and explains how we should work against the false conclusion that it is conscious…that it is, in effect, a person.

Because it’s not.

AI eats the world (Benedict Evans)

Every year (or twice a year now), this tech analyst (formerly with a16z) creates a comprehensive, data-driven presentation about the state of technology. The current (November) edition is 88 slides long. He’s a master of big-picture perspective, of challenging conventional wisdom, and of finding the right questions to ask. He readily admits when he doesn’t have the answers (something many journalists could learn from), and I guarantee he’ll get you thinking about AI in a new way. Some gems:

If models are near commodities, and we don’t know the right product, where will the value be?

“Why did our AI pilot fail?” is a CTO question, not an AI question.

Remember how early this is, and how hard it is to know how the new thing will work.

AI as Normal Technology (Arvind Narayanan and Sayash Kapoor)

These two Princeton computer scientists make a very compelling argument that, when the dust settles, AI might just be…normal. What do they mean by that? It won’t be an AGI that takes over the world and kills us all. But it won’t be useless either. It will be hugely transformative, but slowly: the technology itself may advance quickly, but its adoption depends on things that change much more slowly, such as how organizations, institutions, and people adapt. As AI is deployed and as we adjust, it will eventually become normal, in the same way that electricity is normal today.

The 15,000+-word paper is available in several formats: PDF, HTML, and a blog post. It attracted so much attention and stirred up so much debate that the authors published a guide clarifying some of their points.

Co-Intelligence (Ethan Mollick)

Writing an entire book about generative AI is an almost impossible task, because AI changes so quickly that by the time the book is published, it’s already out of date. But Ethan Mollick did a heroic job, writing a book that conveys the principles so well that I still recommend it now, even though it’s two years old! Sure, the capabilities of AI have advanced by leaps and bounds since it was written, but it’s a very approachable book that addresses the core concepts and implications of generative AI. This Wharton professor also writes an excellent blog, One Useful Thing.
